Presentation on Artificial Intelligence (AI) and Machine Learning (ML) by Google AU/NZ Engineering Director Alan Noble, March 22 2017

These recollections are based on my personal notes; any mistakes are mine. My comments are added in [square brackets].

Google has shifted focus

“From a mobile-first to an AI-first world”

Sundar Pichai, Google CEO

- Until recently the focus has been on mobile, mainly because of mobile use in emerging markets.

- There are around 1300 people working for Google at their Pyrmont location.

- About ½ are engineers

Voice usage is increasing

[Typing is hard for some on mobile]

Fun fact: On Google OS 20% of all searches are made by voice

Artificial intelligence

- Is the science of making things smart

- About making machines smarter

- [I wonder how you determine what is smart, and smart for whom. The individual, the company, the government, society. I can see ethicists are going to be a huge growth field]

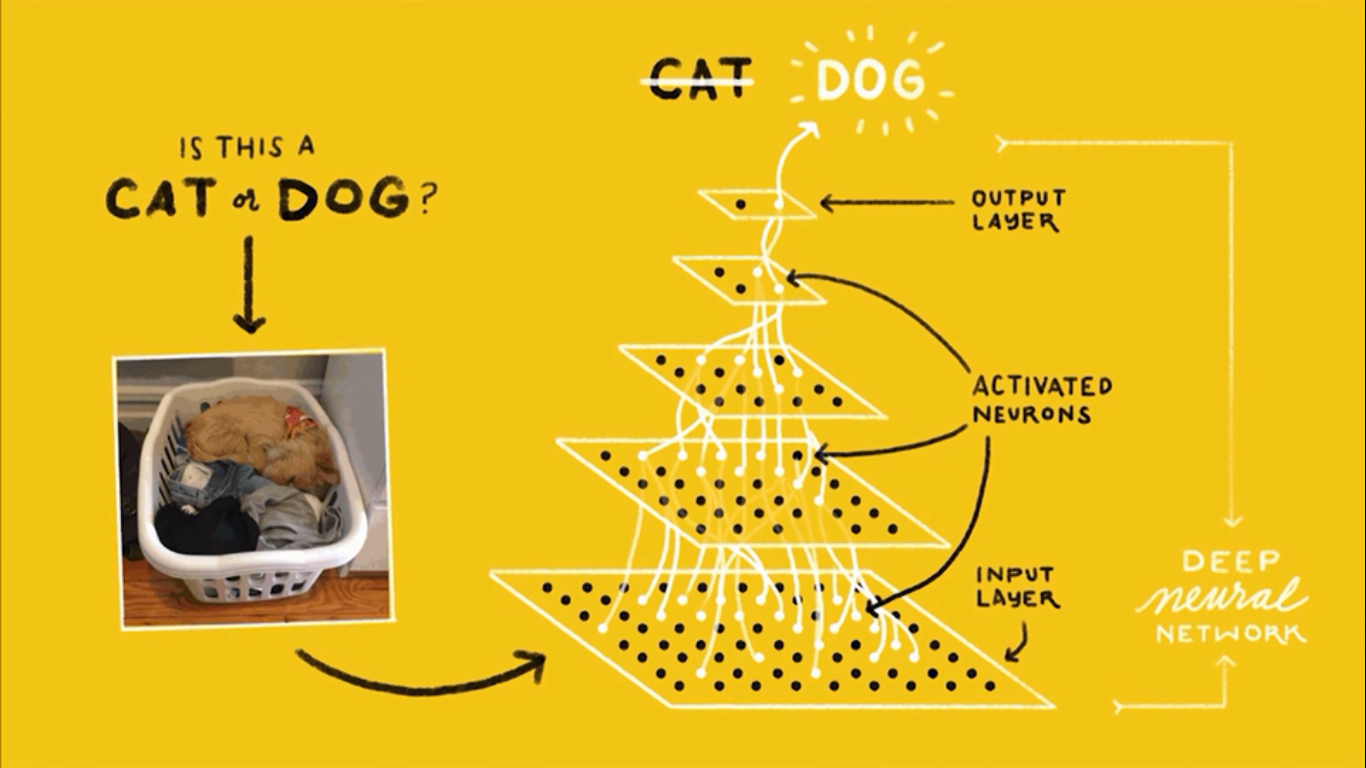

AI is the broader domain with a subset being machine intelligence and within that machine learning.

There has been a shift from rule-based machine learning to the use of deep neural networks.

Deep neural networks

- Deep neural networks allow for an abstraction process [to allow machines to make sense with incomplete data presumably]

- [More of an emergent learning style, rather than prescriptive rote learning.]

- Additionally, ML is not as brittle as rule-based models.

Training sets and a model are needed to support machine learning

You can see it at work in the Google Photos app. It relies on curated data (e.g. photos tagged “dog”) to form a training set, which allows training, i.e. supervised learning.

Besides a training set, a deep neural network requires a model. For example, identifying a type of animal is a job for a classification model.
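To make the training set and model idea concrete, here is a minimal sketch of supervised classification in plain Python. It is not how Google Photos works: it swaps the deep neural network for a much simpler nearest-centroid classifier, and the features (weight and ear length), the numbers, and the names `TRAINING_SET`, `train` and `classify` are all invented for illustration.

```python
from collections import defaultdict
from math import dist

# Hypothetical curated training set: (features, label) pairs.
# The features are made up: (weight in kg, ear length in cm).
TRAINING_SET = [
    ((30.0, 10.0), "dog"),
    ((25.0, 12.0), "dog"),
    ((4.0, 6.0), "cat"),
    ((5.0, 5.0), "cat"),
]


def train(examples):
    """Supervised learning step: average the features seen for each label."""
    grouped = defaultdict(list)
    for features, label in examples:
        grouped[label].append(features)
    # The "model" is simply one average point (centroid) per label.
    return {
        label: tuple(sum(column) / len(column) for column in zip(*rows))
        for label, rows in grouped.items()
    }


def classify(model, features):
    """Classification step: pick the label whose centroid is closest."""
    return min(model, key=lambda label: dist(model[label], features))


model = train(TRAINING_SET)
prediction = classify(model, (28.0, 11.0))  # an animal not in the training set
```

The labels in the training set are what make this supervised: the model only ever learns the categories that someone has already tagged.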

What determines quality of ML?

[I think the size of the training sets and quality of the model would be key determinants of the quality of the ML. Over time it should improve.]

I saw an animated version of this at the presentation. I also noticed that Jeff Dean (the Chuck Norris of Google) used it here:

Jeff Dean on large scale deep learning at Google | High Scalability, 2016

Translation models

Using a ‘zero shot translation model’ means that one model can be shared across all languages, reducing the overhead of having language-specific models.
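My notes do not record how a single shared model is told which language to produce. Google's published description of its multilingual translation system does this by prepending an artificial target-language token (such as `<2es>`) to the input sentence. The sketch below only illustrates that input convention and what "zero shot" means; the function names and the list of training pairs are assumptions for illustration, and no actual translation happens.

```python
# Language pairs the single shared model is assumed to have been trained on.
# Hypothetical example: English<->Japanese and English<->Korean only.
TRAINING_PAIRS = {("en", "ja"), ("ja", "en"), ("en", "ko"), ("ko", "en")}


def tag_for_target(sentence, target_lang):
    """Prefix the sentence with a token naming the desired output language."""
    return f"<2{target_lang}> {sentence}"


def is_zero_shot(source_lang, target_lang):
    """A request is zero shot if the model never saw this pair in training."""
    return (source_lang, target_lang) not in TRAINING_PAIRS


# The same model receives every request; only the leading token changes.
to_japanese = tag_for_target("Hello, how are you?", "ja")
to_korean = tag_for_target("Hello, how are you?", "ko")

# Japanese -> Korean was never a training pair, so it would be zero shot.
japanese_to_korean_is_zero_shot = is_zero_shot("ja", "ko")
```

With one model per language pair, Japanese to Korean would need its own model and its own training data; here it is just another request to the same model.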

Machine learning successes

- Machine learning models allowed Google to achieve a 40% reduction in the energy used for cooling in Google data centres

- Machine learning has been applied to a number of agricultural purposes, for example sorting cucumbers and Fishface (identifying fish caught) [other uses might include: best times for crop rotation, moving livestock between pastures, and chicken sexing]

Some things Google is sharing:

- TensorFlow [tensor meaning a multidimensional array and flow meaning a graph of operations]

- [I think another way to say multidimensional array is n-dimensional matrix. However, I find it easier to think in terms of basic object-oriented computing, with its concepts of an object and its attributes, to help me grasp what this means]
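The two halves of the name can be sketched in plain Python without the library itself. This is only an illustration of the concepts, not TensorFlow's API: nested lists stand in for tensors, and a small dictionary stands in for a graph of operations. The names `shape`, `GRAPH` and `evaluate` are invented for this sketch, and the graph works on single numbers to keep it short.

```python
def shape(tensor):
    """Return the size of each dimension of a nested-list 'tensor'."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0]
    return tuple(dims)


# "Tensor": the same idea at increasing numbers of dimensions.
scalar = 5.0                                  # 0 dimensions
vector = [1.0, 2.0, 3.0]                      # 1 dimension
matrix = [[1.0, 2.0], [3.0, 4.0]]             # 2 dimensions
image = [[[0, 0, 0] for _ in range(4)] for _ in range(2)]  # 2 x 4 pixels, 3 colours

# "Flow": a graph of operations. Each node is (operation, inputs or value).
# This graph describes y = w * x + b without computing anything yet.
GRAPH = {
    "x": ("input", None),
    "w": ("const", 3.0),
    "b": ("const", 1.0),
    "wx": ("mul", ["w", "x"]),
    "y": ("add", ["wx", "b"]),
}


def evaluate(node, feed):
    """Compute a node's value by first evaluating the nodes it depends on."""
    op, args = GRAPH[node]
    if op == "input":
        return feed[node]
    if op == "const":
        return args
    left, right = (evaluate(name, feed) for name in args)
    if op == "mul":
        return left * right
    if op == "add":
        return left + right
    raise ValueError(f"unknown operation: {op}")


result = evaluate("y", {"x": 2.0})  # 3.0 * 2.0 + 1.0
```

Describing the computation as a graph first and feeding data through it afterwards is the "flow" part; the data being fed through would normally be tensors rather than single numbers.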

Inception – an off-the-shelf image recognition tool

AI Experiments with Google - What it says on the tin

Terms of use and data sharing for AI

I think terms of use are an interesting issue, not only for machine learning but for any service that makes use of users' data.

The biggest assumption made with terms and conditions, product disclosure statements and legal agreements is that they are read and understood. Users don't read them… based on my extensive user research of one user.

I like this site which critiques the terms of use agreements.

There has been a spate of recent articles on this.

“Consumer advocate Choice has shone a light on unreasonably long online contracts, after a review of the Amazon Kindle terms and conditions found the document took almost nine hours to read.

The contract for the popular e-reader amounts to some 73,198 words and takes the average reader eight hours and 59 minutes to read - longer than Shakespeare's Hamlet and Macbeth put together."

“ ‘I worked for a bank, so I know how to read these things, but for the general consumer I find the PDS uses ambiguous language ... and I feel a lot of these insurance companies hide behind the legal jargon,’ he said.

‘I suppose how I'd like to see it as a consumer, is including the legal terms, but then in brackets something in plain language about what they mean.’”

I thought the suggestion made in the Canberra Times article was interesting.

Additionally, for me bots have a lot of potential for public good, particularly if combined with behavioural economics and a neuroscience understanding of default human behaviours.

Unfortunately, as always, political and criminal elements (see Future Crime; not that I am relating the two) have more motivation and funding to make effective use of them.

Have a read of the Scout article on the AI Propaganda machine used in the recent American elections.

[The Rise of the Weaponized AI Propaganda Machine – Medium, 13 Feb 2017](https://medium.com/join-scout/the-rise-of-the-weaponized-ai-propaganda-machine-86dac61668b)

It covers things like dark ads that appear only to specific users to validate and amplify their opinions, and fake accounts and sock puppeting to support world views that may not reflect reality. These are dark side uses of deep machine learning and bots.

Finally, Alan also referenced this article on machine learning and AI, which I found useful as a layperson's primer:

The Great A.I. Awakening – New York Times Magazine, 14 December 2016

I am encouraged to see folks starting to get on board with using machine learning for public good.